After my positive experiences integrating DeepSeek v3.1 and GLM-4.5 with Claude Code, I was excited to try another contender: Qwen3-Coder-Plus from Alibaba Cloud. The media hype around Qwen’s coding capabilities had built up high expectations, and I was genuinely hoping to find another solid alternative to add to my toolkit. Unfortunately, what followed was one of the most frustrating AI coding experiences I’ve had.

The Setup: Promising Start with Free Tokens

The integration process began on a positive note. The official documentation for integrating Qwen3-Coder-Plus with Claude Code made the setup straightforward, and it uses the same Anthropic API compatibility pattern that worked so well with DeepSeek and GLM:

- API Key Setup: Created an API key through Alibaba Cloud’s Bailian console

- Free Tier: Received 1 million free tokens as a welcome bonus

- Configuration: Set up the environment variables similar to other Anthropic-compatible models

The initial setup was smooth, and the promise of 1 million free tokens seemed generous - more than enough for thorough testing.
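For readers who have not done this kind of setup, here is a minimal sketch of the configuration step. It relies on the `ANTHROPIC_BASE_URL`, `ANTHROPIC_AUTH_TOKEN`, and `ANTHROPIC_MODEL` environment variables that Claude Code reads. The helper name `build_claude_env` is hypothetical, and the endpoint URL and the lowercase model identifier are assumptions, so take the real values from the Bailian documentation.

```python
import os


def build_claude_env(base_url: str, api_key: str, model: str) -> dict[str, str]:
    """Return a copy of the current environment with Claude Code's overrides set.

    base_url: Anthropic-compatible endpoint (assumption: copy it from the provider docs).
    api_key:  key created in the provider console.
    model:    model identifier expected by the endpoint (assumed: "qwen3-coder-plus").
    """
    env = dict(os.environ)  # copy, so the parent process environment is untouched
    env["ANTHROPIC_BASE_URL"] = base_url
    env["ANTHROPIC_AUTH_TOKEN"] = api_key
    env["ANTHROPIC_MODEL"] = model
    return env
```

Passing the returned dictionary as `env=` to `subprocess.run(["claude"], env=env)` would start Claude Code against the alternative endpoint without changing your shell profile.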

However, I quickly discovered that Alibaba Cloud’s Bailian platform interface is remarkably unintuitive. Basic tasks that should be straightforward, like finding the option to disable “stop service when free quota is exhausted” or monitoring token usage in real time, require digging through multiple menus and settings pages. The platform’s poor user experience design makes even simple administrative tasks frustratingly difficult.

The First Red Flag: Reading the Wrong Files

I started my test by opening a frontend project directory and asking Qwen3-Coder-Plus to analyze the project’s main functionality. This is exactly the type of task where DeepSeek v3.1 and GLM-4.5 typically consume around 200K tokens and provide comprehensive analysis within minutes.

However, I immediately noticed something was wrong. Instead of focusing on the source code, Qwen3-Coder-Plus began obsessively reading compiled JavaScript and CSS files - the output of npm builds. These were minified, processed files that provide no meaningful insight into the project’s architecture or functionality. A competent coding model should immediately recognize that:

- node_modules/ contains dependencies, not source code

- dist/ or build/ directories contain compiled assets

- Source code typically lives in src/, lib/, or similar directories
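The rules in the list above are simple enough to express as a path filter. The following is only an illustrative sketch: the function name `is_probably_source`, the directory set, and the minified-file suffixes are my own assumptions, not anything Claude Code or Qwen3-Coder-Plus actually implements.

```python
from pathlib import PurePosixPath

# Directory names assumed to hold dependencies or build output (from the list above).
GENERATED_DIRS = {"node_modules", "dist", "build"}
# File suffixes assumed to mark minified bundles.
MINIFIED_SUFFIXES = (".min.js", ".min.css")


def is_probably_source(path: str) -> bool:
    """Return True if a relative POSIX-style path looks like hand-written source."""
    p = PurePosixPath(path)
    # Check directory components only, so a file named "build.ts" is not excluded.
    if any(part in GENERATED_DIRS for part in p.parts[:-1]):
        return False
    return not p.name.endswith(MINIFIED_SUFFIXES)


files = [
    "src/App.tsx",
    "dist/app.3f2a.js",
    "node_modules/react/index.js",
    "src/vendor/lib.min.js",
]
print([f for f in files if is_probably_source(f)])  # ['src/App.tsx']
```

A filter like this is crude, but it shows how little logic is needed to avoid the files the model spent its time on.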

I could see the model reading through these useless files, and I was starting to get concerned about both the direction of the analysis and the token consumption. But before I could intervene, errors started appearing. The message indicated that my free quota was exhausted, which seemed impossible given that the model had only been reading irrelevant files.

The Second Red Flag: Free Tier Limitations

This led me to discover a crucial detail: Alibaba Cloud defaults to stopping service when free quotas are exhausted. To continue the interrupted analysis, I had to manually disable the “stop service when free quota is exhausted” feature in the Bailian console, which switched the account to pay-per-token pricing, and then type “continue” to resume the session from where it left off.

The pricing structure itself seemed reasonable at first glance, according to the official pricing documentation:

- 0-32K tokens: ¥0.004 per 1K input tokens, ¥0.016 per 1K output tokens

- 32K-128K tokens: ¥0.006 per 1K input tokens, ¥0.024 per 1K output tokens

- 128K-256K tokens: ¥0.01 per 1K input tokens, ¥0.04 per 1K output tokens

- 256K-1M tokens: ¥0.02 per 1K input tokens, ¥0.2 per 1K output tokens
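To make the tiers concrete, here is a small sketch that prices a single request from the table above. It assumes that the tier is selected by the request's input token count, that "K" means 1,000, and that each upper bound is inclusive. None of these details are spelled out in the list, so treat them as assumptions. The function name `request_cost` is hypothetical.

```python
from decimal import Decimal

# (upper bound of the tier in input tokens, yuan per 1K input, yuan per 1K output)
TIERS = [
    (32_000, Decimal("0.004"), Decimal("0.016")),
    (128_000, Decimal("0.006"), Decimal("0.024")),
    (256_000, Decimal("0.01"), Decimal("0.04")),
    (1_000_000, Decimal("0.02"), Decimal("0.2")),
]


def request_cost(input_tokens: int, output_tokens: int) -> Decimal:
    """Cost in yuan of one request, assuming the tier depends on input size."""
    for upper, in_rate, out_rate in TIERS:
        if input_tokens <= upper:
            return (in_rate * input_tokens + out_rate * output_tokens) / 1000
    raise ValueError("input exceeds the largest listed tier (1M tokens)")


print(request_cost(100_000, 2_000))  # 32K-128K tier
print(request_cost(300_000, 2_000))  # 256K-1M tier
```

Under these assumptions, a request with 100K input tokens and 2K output tokens costs ¥0.648, while the same output on a 300K-token context costs ¥6.40, so long contexts become expensive quickly.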

The Disaster: What Happened After I Pressed Continue

After resuming the session with pay-per-token mode enabled, the situation took a turn for the worse. Unlike before, I could no longer see which files the model was reading - the interface became opaque. All I could tell was that the model seemed to be stuck in some kind of loop, consuming tokens without any visible progress.

The Black Box of Token Consumption

While previously I could at least see what files were being read (even if they were the wrong ones), after pressing “continue”, the process became a complete black box. There was no transparency about what the model was doing, just the unsettling feeling that tokens were being consumed at an alarming rate without any meaningful output.

Growing Suspicion and Forced Exit

After what felt like an eternity with no progress updates, I became increasingly suspicious that the model was trapped in some kind of infinite loop, possibly still reading the same meaningless compiled files over and over again. With no way to monitor what was happening and tokens being consumed at an unknown rate, I made the difficult decision to terminate the session.

The Breaking Point: Shocking Bill and Token Discrepancy

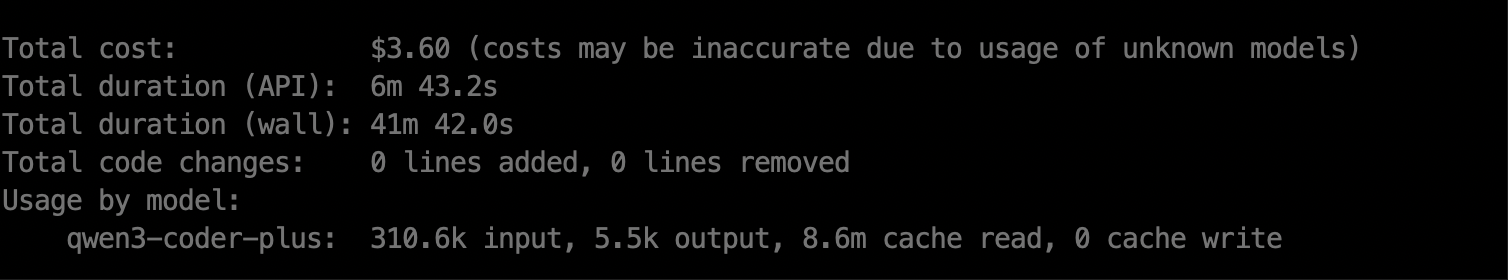

When I finally exited Claude Code, the token consumption summary was as follows:

Over 316K tokens consumed with absolutely no useful output. However, the real shocker came later when I dug deeper into the Bailian platform’s usage statistics. The actual numbers were beyond comprehension:

Let that sink in. While Claude Code reported around 316K tokens, Alibaba Cloud’s system shows I consumed over 12.4 million input tokens - that’s nearly 40 times more than what the client indicated! This isn’t just a minor billing discrepancy; it’s a catastrophic failure in token counting that makes any form of cost control or budget planning completely impossible.

For comparison, similar tasks with DeepSeek v3.1 or GLM-4.5 typically consume around 200K tokens AND provide meaningful analysis.
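Using the figures quoted above, the two ratios can be checked with a few lines of arithmetic. The variable names are mine, and the 200K figure is the approximate usage I cited for the other models, not a measured value.

```python
client_reported = 316_000        # tokens shown by Claude Code at exit
platform_reported = 12_400_000   # input tokens shown in the Bailian console
typical_task = 200_000           # approximate usage with DeepSeek v3.1 / GLM-4.5

vs_client = platform_reported / client_reported
vs_typical = platform_reported / typical_task

print(round(vs_client, 1))   # 39.2
print(round(vs_typical, 1))  # 62.0
```

So the platform figure is about 39 times what the client reported, and about 62 times what the same task usually costs me with the other models.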

The Alarming Aftermath: Delayed Billing Notifications

The final straw came about 20 minutes later. I received an SMS and email from Alibaba Cloud informing me that my account had a negative balance of ¥11.17 (about $1.50 USD). The message warned that if I didn’t recharge, my service “would” be suspended at some point in the past.

Yes, you read that correctly - the billing notification was not only delayed but also contained grammatical errors and referred to suspension times that had already passed. This level of unprofessionalism in a cloud platform’s billing system is deeply concerning.

The Final Bill: 34 Hours Later

But the real shock came 34 hours later when I received another email from Alibaba Cloud - my account was now showing a total charge of ¥58.38 (approximately $8 USD). Let that sink in: I ran this model for a single session, didn’t write a single line of code, just analyzed a frontend project, and it consumed enough tokens to rack up an $8 bill.
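As a rough sanity check, the 12.4 million input tokens reported by the Bailian console can be priced at the lowest and highest input rates from the pricing list. This sketch deliberately ignores output tokens and the free quota to keep the arithmetic simple, so it only brackets the bill rather than reproducing it.

```python
from decimal import Decimal

input_tokens = 12_400_000            # input tokens reported by the Bailian console
lowest_rate = Decimal("0.004")       # yuan per 1K input tokens, 0-32K tier
highest_rate = Decimal("0.02")       # yuan per 1K input tokens, 256K-1M tier
bill = Decimal("58.38")              # final charge in yuan

low = lowest_rate * input_tokens / 1000    # every request in the cheapest tier
high = highest_rate * input_tokens / 1000  # every request in the priciest tier

print(low, high, low <= bill <= high)
```

Under those assumptions, the bracket runs from about ¥49.60 to ¥248. The ¥58.38 charge falls inside it and close to the low end, which would be consistent with most requests landing in the cheaper tiers.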

I’m incredibly fortunate that I terminated the session when I did. If I had let it continue running, this could easily have become $80, $800, or even $8000 in charges. I don’t know how much Alibaba spends on marketing or pays for positive reviews, but I can say with certainty: I will never use this garbage model again.

The Comparison: How Others Handle the Same Task

To put this failure in perspective, let me compare how different models handled the exact same task:

- Gemini 2.5 Pro: ~200K tokens, comprehensive analysis in 2-3 minutes

- DeepSeek v3.1: ~200K tokens, thorough understanding with good explanations

- GLM-4.5: ~200K tokens, solid analysis with fast response times

- Qwen3-Coder-Plus: 12.4M tokens, no useful output, stuck reading compiled files

The difference isn’t just marginal - it’s catastrophic. Qwen3-Coder-Plus consumed roughly 60 times more tokens than the alternatives (12.4M versus about 200K) while delivering zero value.

The Core Problems: Why Qwen3-Coder-Plus Fails

Based on this experience, I’ve identified several fundamental issues with Qwen3-Coder-Plus:

1. Poor Code Comprehension

The model lacks basic understanding of project structure. It cannot distinguish between source code and compiled assets, a fundamental skill for any coding AI.

2. Inefficient Token Usage

The model seems to read files indiscriminately without a strategy for identifying relevant information. This leads to massive token waste.

3. Catastrophic Token Billing Discrepancy

The most alarming discovery was the astronomical difference between what Claude Code reported (316K tokens) and what Alibaba Cloud’s Bailian platform recorded (12.4 million input tokens). This isn’t just a minor discrepancy - it’s a roughly 40x difference that makes any form of cost control completely impossible. The platform’s token counting system appears to be fundamentally broken, creating a massive risk for users who could face unexpected charges without any way to monitor or predict costs.

4. Looping Behavior

The model appears prone to getting stuck in reading loops, consuming tokens without making progress.

5. Unreliable Service and Poor Platform Design

The combination of free tier limitations, delayed billing notifications, and a counter-intuitive platform interface creates a dangerous user experience. Basic administrative tasks require unnecessary effort, and the lack of real-time monitoring capabilities makes it impossible to track usage effectively.

6. Poor Model Self-Awareness

Perhaps the most revealing part of this experience was my attempt to get help from Qwen itself. I went to tongyi.com and used the Qwen3 Plus model to ask how to integrate Qwen3-Coder-Plus with Claude Code. The response was shocking - the model insisted that Claude Code was a model itself and couldn’t be integrated with another model.

Even after I enabled deep thinking mode, it maintained that Qwen3-Coder-Plus and Claude Code were completely separate models that couldn’t be integrated. When I explained that Claude Code is a command-line programming tool, it still produced meaningless nonsense about their differences and insisted they couldn’t work together. This fundamental lack of understanding of its own product family’s integration capabilities is deeply concerning.

The Broader Implications

This experience raises serious concerns about using Qwen3-Coder-Plus in production environments:

- Cost Risk: The potential for runaway token consumption could lead to unexpected charges

- Billing Reliability: Delayed notifications make it impossible to monitor costs in real time

- Service Quality: The model’s inability to perform basic coding tasks makes it unsuitable for professional development
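One way to limit the cost risk described above is a hard client-side budget. The sketch below is hypothetical: `TokenBudget` and `BudgetExceeded` are names I made up, and it assumes you can obtain per-request input and output token counts, for example from the `usage` object in an Anthropic-style API response. Since the client and platform counts disagreed so badly in my test, a guard like this is only as reliable as the numbers fed into it.

```python
class BudgetExceeded(RuntimeError):
    """Raised when recorded usage goes past the configured limit."""


class TokenBudget:
    """Accumulate token usage and stop once a hard limit is crossed."""

    def __init__(self, limit: int) -> None:
        if limit <= 0:
            raise ValueError("limit must be positive")
        self.limit = limit
        self.used = 0

    def record(self, input_tokens: int, output_tokens: int) -> int:
        """Add one request's usage and return the remaining budget."""
        self.used += input_tokens + output_tokens
        if self.used > self.limit:
            raise BudgetExceeded(
                f"used {self.used} tokens, limit is {self.limit}"
            )
        return self.limit - self.used


budget = TokenBudget(limit=500_000)
print(budget.record(input_tokens=180_000, output_tokens=4_000))  # 316000
```

Calling `record` after every request and aborting the session on `BudgetExceeded` would have capped my test at a known number of tokens, at least by the client's own count.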

My Recommendation: Avoid Qwen3-Coder-Plus

After this experience, I cannot recommend Qwen3-Coder-Plus for any serious development work. The combination of poor performance, inefficient token usage, and unreliable billing creates a product that’s not just subpar - it’s potentially harmful to use.

For developers looking for cost-effective AI coding assistants, I’d strongly recommend:

- DeepSeek v3.1 for reliable performance and reasonable pricing

- GLM-4.5 for generous free tiers and subscription options

- Gemini 2.5 Pro for those with access to Google’s ecosystem

A Silver Lining: The Test Account Strategy

If there’s one positive takeaway from this experience, it’s the wisdom of using a separate test account for evaluating new AI services. I’m incredibly grateful that I didn’t conduct this test with my production cloud account - the billing delays and potential for runaway costs could have been much more damaging.

Conclusion: Media Hype vs. Reality

Qwen3-Coder-Plus serves as a stark reminder that media hype doesn’t always reflect real-world performance. While the model may have shown promise in controlled demonstrations, my practical experience revealed fundamental flaws that make it unsuitable for professional development work.

The AI coding assistant space is competitive, and users have excellent alternatives that deliver reliable performance without the risks and frustrations I encountered with Qwen3-Coder-Plus. Until Alibaba Cloud addresses these core issues, I’d advise developers to look elsewhere for their AI coding needs.

For now, I’ll be sticking with DeepSeek v3.1 and GLM-4.5 for my Claude Code integration needs. They may not be perfect, but they deliver consistent results without the drama and disappointment that characterized my Qwen3-Coder-Plus experience.